Joe Biden’s campaign was prepared to go all out after tonight’s debate against Donald Trump.

Instead, they found themselves cornered.

The campaign’s top representatives ended up pinned to one end of the press room by a scrum of reporters on Thursday night, fielding questions about replacing Biden, 81, at the top of the ticket and whether tonight’s performance had deepened concerns about his fitness for office.

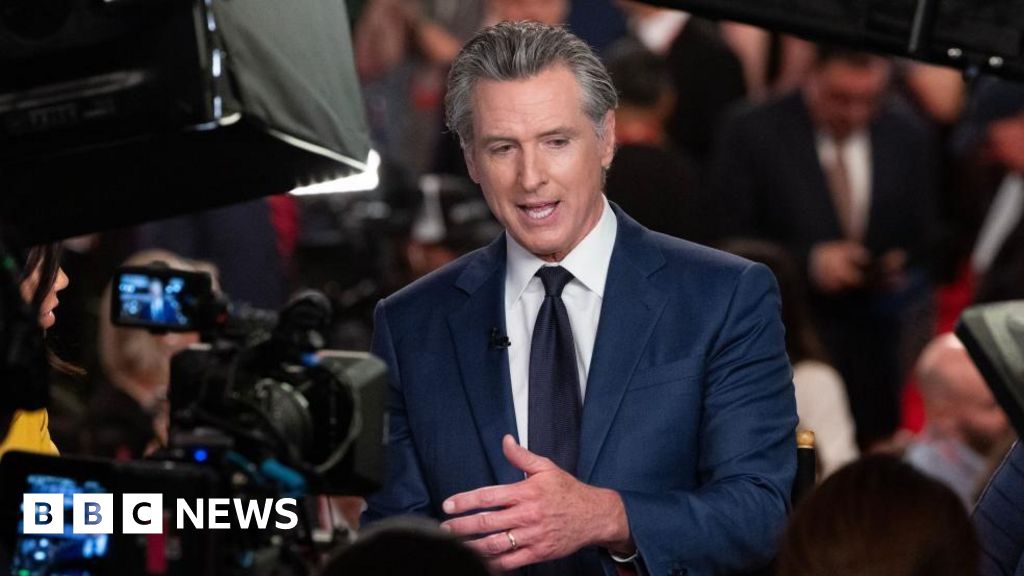

California Governor Gavin Newsom was asked whether the Democratic Party should replace the president as its candidate.

The 56-year-old Democrat responded that he was “old-fashioned” and cared more about the “substance and facts” at issue than the frenzy over Biden’s energy.

It wasn’t the conversation Democrats had hoped to have tonight. But Biden’s subdued performance during the 90-minute event, in which he sometimes stumbled over his answers and spoke in a voice made hoarse by a cold, caused immediate panic among Democrats as reporters pressed on how his campaign would recover.

Voters’ concerns about his age already loomed large over the debate, and even Biden’s staunchest supporters acknowledged that his performance was unlikely to ease them.

David Plouffe, a Democratic strategist who ran Barack Obama’s 2008 campaign, called it “a Defcon 1 moment,” referring to the U.S. military’s term for the highest level of nuclear threat.

“Tonight it felt like there was a 30-year age gap between them,” he said of the two candidates, who are less than four years apart. “And I think that’s what voters are really going to have to deal with after this.”

Andrew Yang, who challenged Biden in the 2020 Democratic primary and dropped out early in the race, wrote on X that the president should “do the right thing” by “stepping aside and letting the Democratic National Committee choose another candidate.” He added the hashtag #swapJoeout.

Biden is unlikely to be replaced as the Democratic Party’s nominee for several reasons: he is the incumbent president, only a few months remain before the election, and the chaotic process of choosing another candidate could derail the party’s chances of winning the White House in November.

However, the debate was “an important reminder of why, after saving democracy and defeating Trump, we have to end the gerontocracy,” Amanda Litman, who works to recruit young Democratic candidates, told the BBC.

“I think his job just got a little bit harder,” David Axelrod, another top Obama lieutenant, told CNN.

Back in the press room, campaign representatives answered question after question about Biden’s performance. No matter how hard they tried, they couldn’t change the conversation.

Rep. Robert Garcia of California told reporters that Trump “lied, and lied, and lied again.”

The former president made misleading statements during the debate. He falsely claimed that Democratic-controlled states wanted to allow abortion “after birth,” an argument used by anti-abortion activists.

He also said Biden “encouraged” Russian President Vladimir Putin to attack Ukraine, when in reality the Biden administration has strongly supported Ukraine in the war.

The Biden campaign raised similar talking points.

“Donald Trump is a liar and a criminal. And he cannot be our president,” the campaign said in a statement after the debate.

Vice President Kamala Harris echoed the attack. “Donald Trump lied over and over again,” she told CNN.

Appearing at a debate viewing party, Biden focused on this argument.

“They’re going to check out all the things he said,” the president told the crowd. “I can’t think of anything he said that was true.”

“Look, we’re going to beat this guy, we’ve got to beat him. I need you to beat him. You’re the people I’m running for,” Biden added.

Later, in the press room, Trump’s allies and campaign staff gleefully declared victory for their candidate.

Meanwhile, Democrats such as Newsom, Garcia and Sen. Raphael Warnock kept their appearances relatively brief after fielding the same questions about Biden’s performance over and over.

“I’ve been a representative for a few presidential candidates in my time,” former Democratic Sen. Claire McCaskill told MSNBC. “When you’re a representative, you have to focus on the positive,” she said.

But tonight, she said, she had to be “really honest.”

“He had one thing to do, and that was to convince America that he was up to the job at his age. And tonight he failed in his attempt.”